Part 3 — Who to trust?

This post is the third in a series of four. It originates from #AutoProcure, the exciting initiative recently launched by Kelly Barner of Buyers Meeting Point and Rosslyn Analytics to create “an active discussion around procurement’s relationship with automation: both what it is and what it ought to be.”

In the first post in this series, “Control, trust and accountability in a world of machines”, I addressed the question of “who is in control” by looking at how computers transformed the job of airplane pilots.

Pilots have had to learn to cooperate with computers in two different configurations, because the chain of command differs between Boeing and Airbus:

- Boeing places more trust in the pilots

- Airbus places more trust in the computer

This raises the important question of accountability, which I addressed in the second part by looking at another domain where machines will have to make difficult (moral) choices: self-driving cars.

I concluded that the design of the machine has a substantial impact on accountability and on our ability to ensure that problems do not happen again…

What is at stake is trust.

By “design”, I mean the choice between “artificial intelligence and machine learning. Most people use them interchangeably. This is a mistake.”

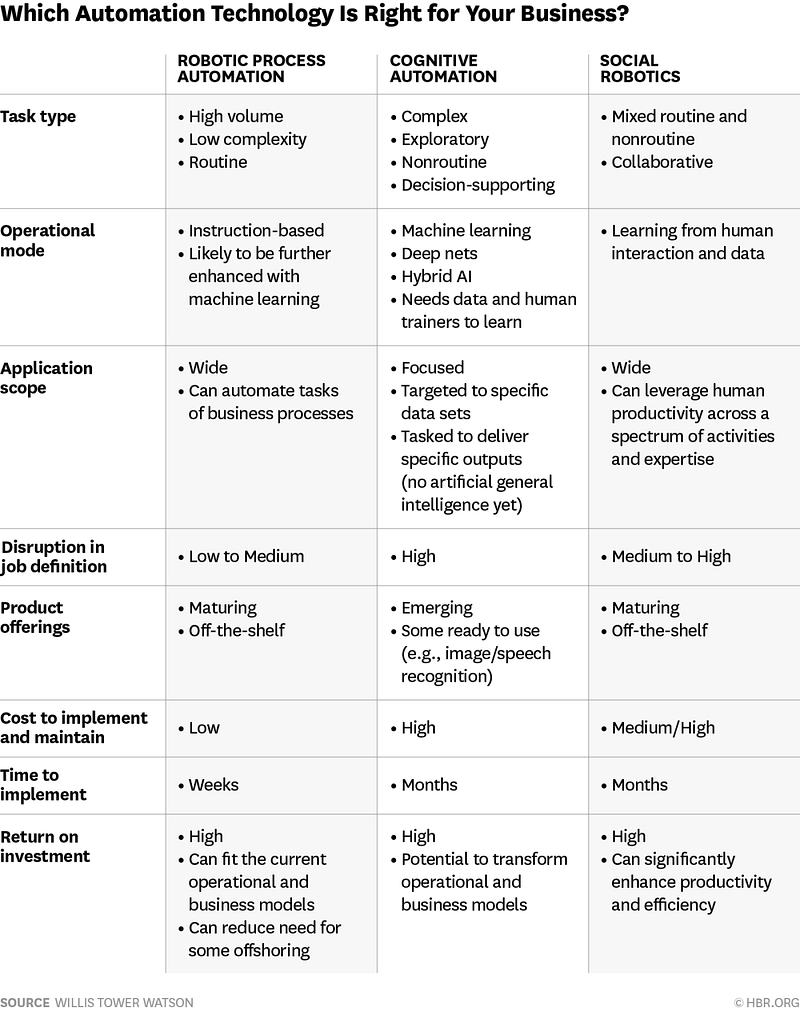

For a long time, and for most people, the industrial applications of automation were in the field of robotics: factory workers replaced by robots on the assembly line. Now, technology is making its way into cognitive tasks, not just physical ones. This means that work can be automated in new ways:

The 3 Ways Work Can Be Automated, Harvard Business Review, Oct. 2016

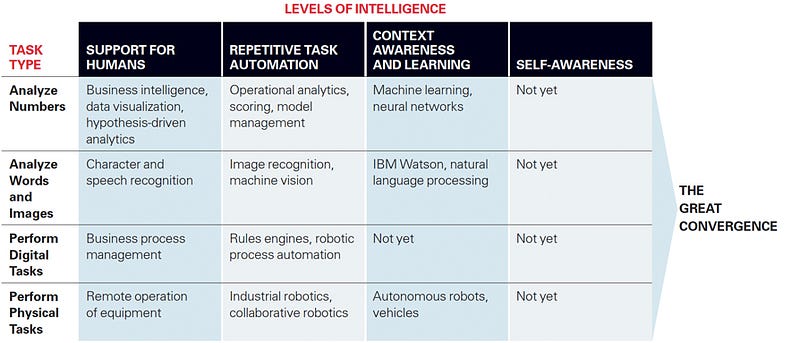

Machines are developing new levels of intelligence:

Just How Smart Are Smart Machines?, MIT Sloan Management Review, March 2016

If Procurement wants to move forward, it has to move beyond “robotics” (the automation of “physical” tasks such as OCR, interfaces between systems, analytics…) and embrace Cognitive Procurement. However, Procurement organizations and providers must be aware of the choices they make among the various options available to build Cognitive Procurement.

Programming the machine to…

There are two different philosophies for automating work:

- Programming the machine to do something

- Programming the machine to learn something

This is where Artificial Intelligence (AI) and Machine Learning (ML) differ. This is also where the role of humans differs.

To illustrate the difference between the two, I will return to self-driving cars (which I also used in part 2). Put simply, there are two main ways to build a self-driving car:

- Program it with rules: if THIS then THAT…

- Teach the car how to drive by “showing” how it is done…

The first option, programming, implies that someone (the programmer) defines the truth (what the car should do) in every situation. This is close to impossible (see the “who to kill” dilemma in part 2).

This is why the second option is often used for complex tasks: the computer has to think and improvise on its own, based on experience rather than pre-written rules (which is how we humans function).
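A deliberately simplified Python sketch may help make the two philosophies concrete. It assumes a hypothetical car that only decides whether to brake, from a single input: the distance in metres to the obstacle ahead. The 20 m rule, the function names and the demonstration data are all invented for illustration, and the “teaching” option is implemented here as a one-feature decision stump, about the simplest way a machine can learn from being shown; real self-driving systems rely on far richer models.

```python
# Toy contrast between "programming" and "teaching" a machine.
# Hypothetical scenario: the car only decides whether to brake, based on
# the distance (in metres) to the obstacle ahead.

Demo = tuple[float, bool]  # (distance_m, did the human driver brake?)


def programmed_brake(distance_m: float) -> bool:
    """Option 1: the programmer defines the truth with a rule (if THIS then THAT)."""
    return distance_m < 20.0  # hypothetical hand-picked threshold


def learn_threshold(demonstrations: list[Demo]) -> float:
    """Option 2: infer a braking threshold from recorded human driving.

    Tries each observed distance as a threshold and keeps the one whose rule
    "brake if distance < threshold" agrees with the most demonstrations
    (a one-feature decision stump).
    """
    best_threshold, best_agreement = 0.0, -1
    for candidate, _ in demonstrations:
        # Count how many demonstrations this candidate threshold reproduces.
        agreement = sum((d < candidate) == braked for d, braked in demonstrations)
        if agreement > best_agreement:
            best_threshold, best_agreement = candidate, agreement
    return best_threshold


# Invented demonstrations: the human braked at 5, 12 and 18 m, but not at 25 or 40 m.
demos = [(5.0, True), (12.0, True), (18.0, True), (25.0, False), (40.0, False)]
threshold = learn_threshold(demos)
print(threshold)                                 # 25.0
print(programmed_brake(22.0), 22.0 < threshold)  # False True
```

With these invented demonstrations, the learned threshold is 25 m, so at 22 m the two versions disagree: the hand-written rule keeps going while the learned rule brakes, simply because that is what the examples implied.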

In reality, most manufacturers opt for a mix of both:

“Many of the automakers and Silicon Valley companies striving to get self-driving cars onto the road have said the technology is still likely at least a half-decade away from feasibility. Even public tests like Uber’s autonomous taxis in Pittsburgh can only travel on certain roads that the company’s engineers have meticulously mapped out.

Uber and most others are working on solving essentially the same problem: how to map out roads and the various obstacles (turns, people) and rules (stop lights, speed limits), and then teach self-driving cars to accurately apply all of that information as they maneuver city streets and highways.” Source

Interestingly, there are initiatives, apparently successful ones, that rely only on the second option: driving the car for hours and letting it learn, with no programming of driving rules required. This is what George Hotz did in 2015 to develop his autonomous car in his garage, and, more recently, what Nvidia did.

Too good to be true?

Both options (programming or teaching) have their limits. You can only program a computer based on your current knowledge and experience. In real-life situations (like piloting a plane, see part 1), the unexpected often happens.

“And again and again pilots, such as those of the Lufthansa plane that almost crashed in a crosswind in Hamburg, run into new, nasty surprises that none of the engineers had predicted.” The Computer vs. the Captain. Will Increasing Automation Make Jets Less Safe?

And teaching is sometimes no better a solution. Even with top-notch learning (or deep-learning) capabilities, machines learn from what you feed them. Garbage in, garbage out, as Microsoft learned the hard way with its Tay chatbot. And even when the garbage is not fed in on purpose, it can creep in through bias in your understanding of the situation or through poorly selected samples.
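The “poorly selected samples” problem can be sketched in a few lines of Python. The learner below is a hypothetical nearest-neighbour rule (copy what the human did in the most similar recorded situation) for a car deciding whether to brake from the distance in metres to the obstacle ahead; the function name and both data sets are made up for illustration. The learning code is identical in both calls; only the examples differ.

```python
# Hypothetical illustration of "garbage in, garbage out": the learning code is
# identical in both cases; only the examples it is fed differ.

Demo = tuple[float, bool]  # (distance to obstacle in metres, did the human brake?)


def nearest_neighbour_brake(distance_m: float, demonstrations: list[Demo]) -> bool:
    """Copy whatever the human did in the most similar recorded situation."""
    closest = min(demonstrations, key=lambda demo: abs(demo[0] - distance_m))
    return closest[1]


# Invented data recorded in ordinary traffic: braking starts somewhere below ~20 m.
representative = [(5.0, True), (12.0, True), (18.0, True), (25.0, False), (40.0, False)]

# Invented, poorly selected data: recorded only at low speed in a car park,
# where drivers brake late.
poorly_selected = [(2.0, True), (8.0, False), (15.0, False)]

print(nearest_neighbour_brake(12.0, representative))   # True
print(nearest_neighbour_brake(12.0, poorly_selected))  # False
```

Fed with the car-park demonstrations, the very same code decides not to brake at 12 m. Nothing in the algorithm is wrong; the problem lies entirely in the samples it learned from.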

At the end of the day, a user who depends on an intelligent machine has to trust that machine. With programming, one can rely on trust in the programmers (the solution provider). But when it comes to machine learning alone, it is another story, especially considering that we have reached a point in the development of machine learning where nobody (not even specialists) really understands how these systems arrive at their results!

“Consider that you’re a bank, and you have detailed transaction and credit histories of all your customers. You use a sophisticated deep learning algorithm to identify loan defaulters. Given that you’ve got a huge database of user behaviour patterns, your algorithm will probably fetch you a really good accuracy on this task, except that once you do suspect a future defaulter, you don’t exactly know what exactly caused suspicion, making justification of the prediction more difficult.” The Real Risks of Smarter Machines, Abhimanyu Dubey

This “black box” aspect is an important risk factor, and I see many business scenarios in Procurement where it would be very problematic. It is difficult to know what to do when a recommendation for action comes out of the blue. The only options you have are:

- to try to understand why the machine came up with that result (which defeats the purpose of using machines to enhance decision-making processes),

- or to blindly trust the machine and do whatever it says.

So, it is important not to forget that people are (and will continue to be) what makes the difference!

“People will continue to have advantages over even the smartest machines. They are better able to interpret unstructured data — for example, the meaning of a poem or whether an image is of a good neighborhood or a bad one. They have the cognitive breadth to simultaneously do a lot of different things well. The judgment and flexibility that come with these basic advantages will continue to be the basis of any enterprise’s ability to innovate, delight customers, and prevail in competitive markets — where, soon enough, cognitive technologies will be ubiquitous.” Just How Smart Are Smart Machines?, MIT Sloan Management Review, March 2016

It is important that everyone involved in defining, creating, and implementing the principles of Cognitive Procurement understands the choices available and their implications. To me, the aim has to be to augment people, not to replace them: to enable people to unlock their intelligence and use their “cognitive surplus” to do things that were previously impossible.

This question of “what do we want” for the future of Procurement and work in general is what part 4 will cover!